Tag: AI

-

Will new models like DeepSeek reduce the direct environmental footprint of AI?

I’m in a chat at work, and recently this question came up: Are folks expecting a reduction in energy demands if DeepSeek-style models become dominant vs the ones you see from OpenAI? I’ve paraphrased it slightly, but it’s an interesting question, so rather than obnoxiously share a long answer into that chat, I…

-

Do tech firms need to report their revenue from the oil and gas sector now?

At work, we’re building some open source software to make it possible to parse corporate disclosures that a growing number of companies need to publish for the world to see. These are largely being driven by new laws passed around the world about corporate transparency, and I’m sharing a question here that I’d like some…

-

How I use LLMs – neat tricks with Simon’s `llm` tool

Earlier this year I co-authored a report about the direct environmental impact of AI, which might give the impression I’m massively anti-AI, because it talks about the significant social and environmental impact of using it. I’m not. I’m (still, slowly) working through the content of the Climate Change AI Summer School, and I use it a…

-

Revisiting H2-powered datacentres

This is a follow-on post from an earlier post I dashed out – Hydrogen datacentres – is this legit? – where a podcast interview caught my attention about one approach being sold to address demands on the grid caused by new datacentres. This post will make more sense if you have read it. Basically ECL…

-

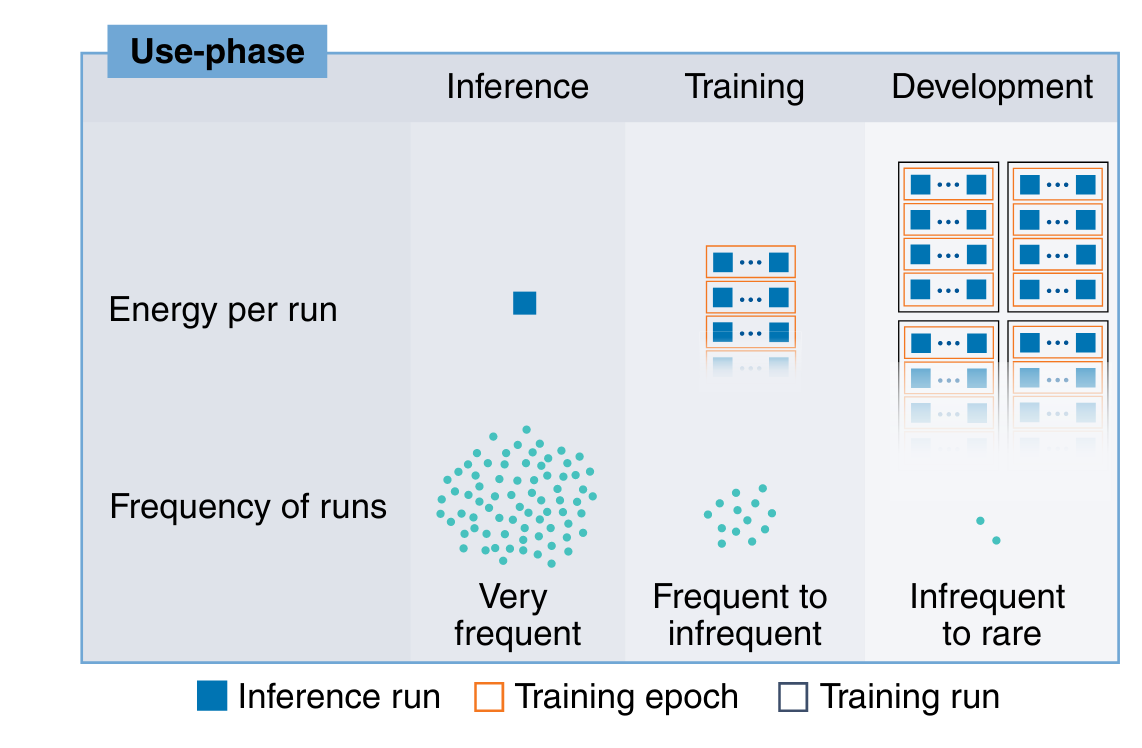

Does the EU AI Act really call for tracking inference as well as training in AI models?

I’m sharing this post as I think it helped me realise something I hadn’t appreciated til today. I don’t build AI models, and to be honest, while I make sparing use of GitHub Copilot and Perplexity, I’m definitely not a power user. My interest in them is more linked to my day job, and…

-

TIL: training small models can be more energy intensive than training large models

As I end up reading more around AI, I came across this snippet from a recent post by Sayash Kapoor, which initially felt really counterintuitive: Paradoxically, smaller models require more training to reach the same level of performance. So the downward pressure on model size is putting upward pressure on training compute. In effect,…

-

How do I track the direct environmental impact of my own inference and training when working with AI?

I can’t be the only person who is in a situation like this: These feel like things you ought to know before you start a project, to help decide if you even should go ahead, but for many folks, that ship has sailed. So, I’ll try unpacking these points, and share some context that…